The Rise of Hyperreal Fakes: Can We Still Trust What We See?

We’re entering an era where seeing isn’t believing. Artificial intelligence has rapidly advanced to the point where it can generate images of faces so realistic they can fool the human eye – and even experts. A recent study from the University of Leeds highlights just how challenging it’s becoming to distinguish between real people and AI-generated personas, but also offers a glimmer of hope: targeted training can significantly improve our detection skills.

The Super-Recognizer Advantage

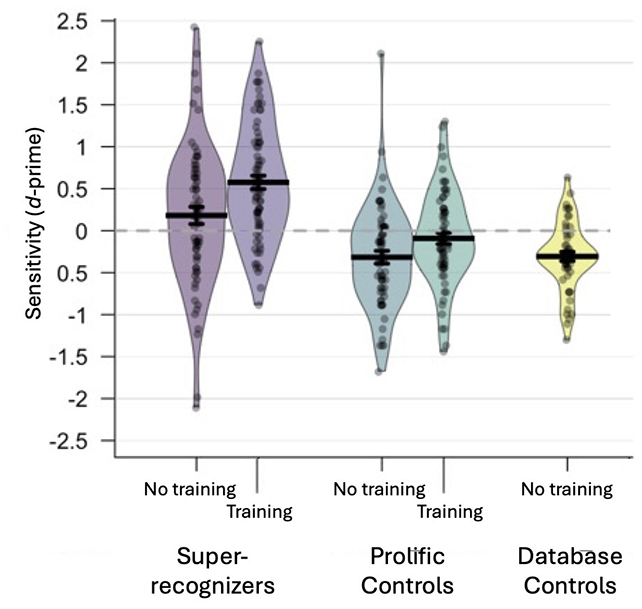

The study, published in Royal Society Open Science, pitted “super-recognizers” – individuals with exceptional face recognition abilities – against those with typical skills. Both groups struggled to identify AI-generated faces, but as expected, the super-recognizers performed better, correctly identifying fakes 41% of the time compared to 31% for the control group. This underscores the inherent difficulty even for those naturally gifted at facial recognition.

However, the most encouraging finding was the impact of just five minutes of training. Participants were shown examples of common AI artifacts – subtle imperfections like missing teeth or blurring around hair and skin – and taught what to look for. After this brief intervention, typical recognizers reached 51% accuracy, which is still essentially chance level, while super-recognizers rose to 64%.
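To put those percentages side by side, here is a small Python sketch that computes each group's gain from training and how far the post-training score sits from guessing. The four accuracy figures are the ones reported above; the 50% chance level is an assumption based on a two-way real-versus-fake judgment, and the names `reported`, `CHANCE`, and `summarize` are purely illustrative.

```python
# Detection accuracy (percent) as reported in the article, before and after training.
reported = {
    "typical": {"before": 31, "after": 51},
    "super-recognizer": {"before": 41, "after": 64},
}

# Assumption: a two-way real-vs-fake judgment, so pure guessing scores about 50%.
CHANCE = 50


def summarize(scores):
    """Return the training gain and the post-training margin over chance, in points."""
    return {
        "gain": scores["after"] - scores["before"],
        "margin_over_chance": scores["after"] - CHANCE,
    }


summary = {group: summarize(scores) for group, scores in reported.items()}

for group, stats in summary.items():
    print(f"{group}: +{stats['gain']} points from training, "
          f"{stats['margin_over_chance']:+d} points relative to chance")
```

Both groups gained about the same amount (20 and 23 percentage points), but only the super-recognizers ended up clearly above guessing.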

Beyond Spotting Fakes: The Future of AI Face Detection

This research isn’t just about identifying fake faces; it’s about understanding the evolving landscape of digital deception. The technology behind these images, typically Generative Adversarial Networks (GANs), is constantly improving. GANs pit two AI algorithms against each other – one creating images, the other evaluating their realism – so each round of training pushes the generator to produce images the evaluator can no longer tell apart from real ones. As a result, AI-generated faces are likely to keep getting more convincing.
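For readers who want the formal version, the contest between the two networks is usually written as a minimax objective, following the original 2014 GAN formulation. The notation is introduced here and does not come from the study: $G$ is the image-creating network (the generator), $D$ is the evaluating network (the discriminator) that outputs the probability an image is real, $x$ is a real image, and $z$ is random noise fed to the generator.

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to make this value large by scoring real images near 1 and generated images near 0, while the generator tries to make it small by producing images that the discriminator scores as real.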

Did you know? The first GANs, developed in 2014, produced blurry, distorted images. Today’s models can generate photorealistic faces with incredible detail.

The implications are far-reaching. From sophisticated phishing scams and fake social media profiles to the potential for manipulating evidence and spreading disinformation, the misuse of AI-generated faces poses a significant threat. Consider the recent rise in “deepfake” videos, where a person’s likeness is swapped onto another’s body – a technology that relies on similar AI principles.

Emerging Trends and Countermeasures

Several key trends are shaping the future of AI face detection:

- AI-Powered Detection Tools: Companies are developing AI algorithms specifically designed to identify AI-generated images. These tools analyze images for subtle inconsistencies and artifacts that humans might miss. Sensity AI, for example, focuses on detecting deepfakes and manipulated media.

- Biometric Watermarking: Embedding imperceptible digital watermarks into images and videos could help verify their authenticity. This technology is still in its early stages but holds promise.

- Blockchain Verification: Utilizing blockchain technology to create a tamper-proof record of an image’s origin and modifications could provide a reliable way to confirm its authenticity.

- Neuromorphic Computing: Inspired by the human brain, neuromorphic chips could offer a more efficient and accurate way to process visual information and detect subtle anomalies in AI-generated images.

- Enhanced Training Programs: Building on the Leeds study, more sophisticated training programs will be crucial for equipping individuals with the skills to identify AI fakes. These programs could be integrated into cybersecurity training, media literacy education, and even everyday online safety courses.
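The tamper-evidence idea behind the blockchain approach above can be sketched in a few lines of Python. This is a minimal illustration using a simple hash chain, not a real blockchain (there is no network or consensus), and it is not the method of any particular product. The function names and the sample history are hypothetical.

```python
import hashlib
import json


def record_hash(record):
    """Hash a record's contents deterministically with SHA-256."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(chain, image_bytes, note):
    """Append an entry that links the image's hash to the previous entry's hash."""
    prev = chain[-1]["entry_hash"] if chain else "0" * 64
    body = {
        "image_hash": hashlib.sha256(image_bytes).hexdigest(),
        "note": note,
        "prev_hash": prev,
    }
    chain.append({**body, "entry_hash": record_hash(body)})
    return chain


def verify(chain):
    """Check that every entry is intact and points at its predecessor."""
    prev = "0" * 64
    for entry in chain:
        body = {k: entry[k] for k in ("image_hash", "note", "prev_hash")}
        if entry["prev_hash"] != prev or entry["entry_hash"] != record_hash(body):
            return False
        prev = entry["entry_hash"]
    return True


# Hypothetical history: an original capture followed by a crop.
history = []
append_entry(history, b"original pixels", "captured")
append_entry(history, b"cropped pixels", "cropped")
print(verify(history))  # True

history[0]["note"] = "captured by someone else"  # tamper with the record
print(verify(history))  # False
```

Because each entry's hash covers the previous entry's hash, changing any earlier record breaks verification for the chain from that point on. A real system would also need a trusted way to publish and timestamp the entries.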

Pro Tip: Pay attention to details. Look for inconsistencies in lighting, shadows, and textures. Check for unnatural symmetry or blurring around the edges of the face. Reverse image search can also help determine if an image has been altered or is being used without permission.
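As a rough illustration of the symmetry check, the Python sketch below scores how closely a grayscale image matches its own mirror image. It is a toy heuristic, not a detector: it assumes the face is centered and facing the camera, the function name `asymmetry_score` is made up for this example, and no threshold for "suspiciously symmetric" is implied.

```python
def asymmetry_score(pixels):
    """Mean absolute difference between a grayscale grid and its mirror image.

    `pixels` is a list of equal-length rows of brightness values (0-255).
    A score of 0.0 means the grid is perfectly left-right symmetric.
    """
    total = 0
    count = 0
    for row in pixels:
        # Pair each pixel with the one at the mirrored position in the same row.
        for left, right in zip(row, reversed(row)):
            total += abs(left - right)
            count += 1
    return total / count if count else 0.0


# Tiny made-up grids standing in for real images.
symmetric = [[10, 200, 10], [50, 90, 50]]
lopsided = [[10, 200, 90], [50, 90, 0]]

print(asymmetry_score(symmetric))
print(asymmetry_score(lopsided))
```

Real faces are never perfectly symmetric, so an unusually low score on a centered portrait is one more reason to look closer, not proof of a fake.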

The Role of Super-Recognizers in a Digital World

The study’s findings suggest that super-recognizers could be a valuable asset in combating digital deception. Law enforcement agencies, social media platforms, and security firms could employ them to verify identities and detect fraudulent activity. However, even super-recognizers are not infallible: they identified only 41% of fakes without training and 64% with it, so training remains essential.

FAQ: AI-Generated Faces

- Q: How realistic are AI-generated faces now?

A: They are becoming incredibly realistic, often indistinguishable from real faces to the untrained eye.

- Q: Can AI detect AI?

A: Yes, AI-powered detection tools are being developed, but they are constantly playing catch-up with the advancements in image generation technology.

- Q: What can I do to protect myself from AI-generated scams?

A: Be skeptical of online profiles, verify information through multiple sources, and be cautious about sharing personal information.

- Q: Is there a way to tell if a video is a deepfake?

A: Look for inconsistencies in blinking, lip syncing, and facial expressions. Also, pay attention to the quality of the video and any unnatural blurring or distortions.

The ability to create convincing fake faces is a powerful technology with the potential for both good and harm. As AI continues to evolve, it’s crucial that we develop the tools and skills necessary to navigate this increasingly complex digital landscape. Staying informed, being critical of what we see online, and supporting the development of robust detection technologies are essential steps in protecting ourselves from the risks of hyperreal fakes.
