The AI-Fueled Disinformation Age: Beyond ‘Unmasking’ ICE Agents

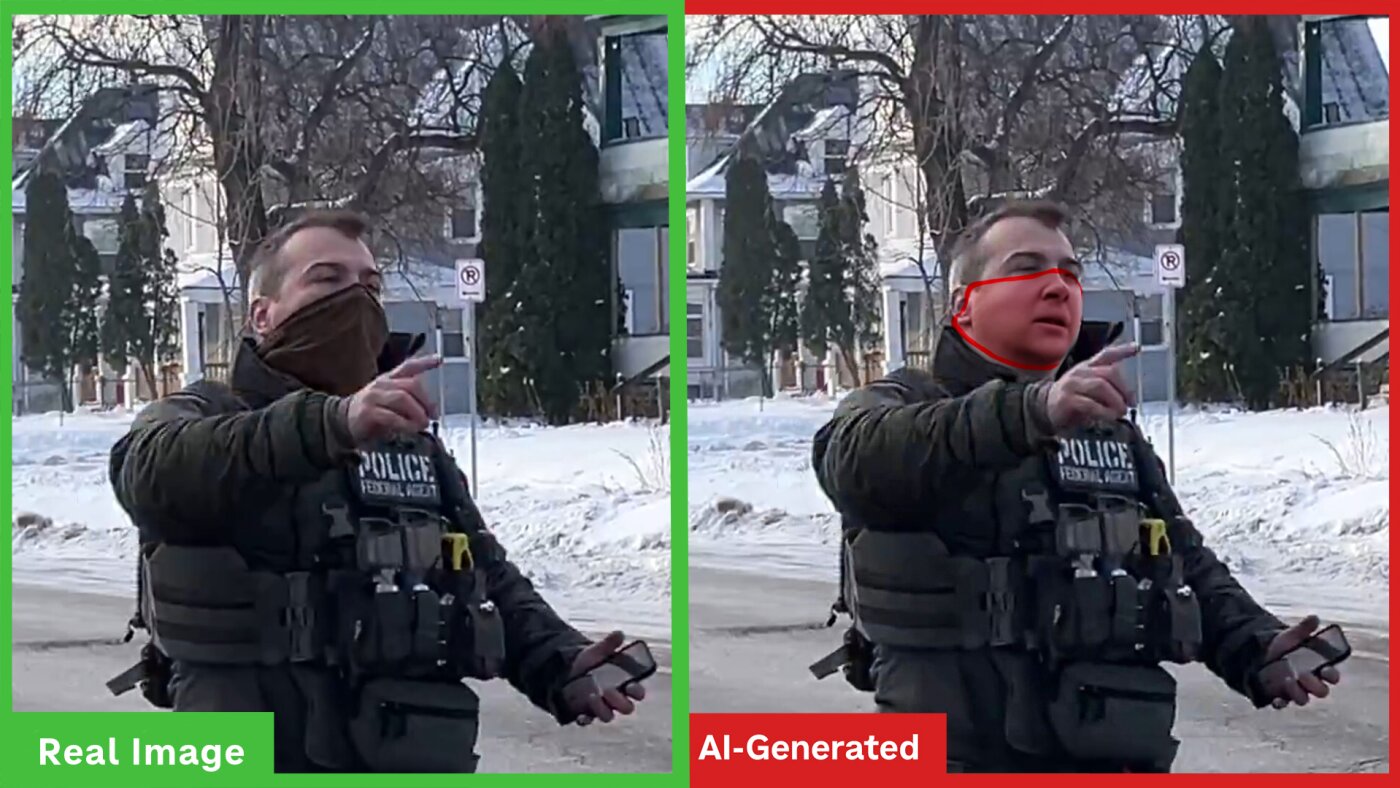

The recent misidentification of an ICE agent after a shooting in Minneapolis, an error fueled by the AI chatbot Grok, isn’t an isolated event. It’s a stark warning about a rapidly evolving landscape in which artificial intelligence is weaponized to manipulate information, sow discord, and inflict real-world harm. The ease with which AI can now generate convincing but fabricated narratives demands a critical reassessment of how we consume and verify information.

The Rise of Synthetic Media and Its Impact on Trust

For years, the threat of “deepfakes” (hyperrealistic but entirely fabricated videos) dominated the conversation around AI and disinformation. Deepfakes remain a concern, but the current danger is broader. Generative AI tools like Grok, DALL-E 3, and Midjourney are lowering the barrier to entry for creating synthetic media: images, audio, and text that are AI-generated or heavily manipulated. The problem isn’t just that the fakes are convincing; it’s the sheer volume of disinformation that can now be produced and disseminated.

According to a recent report by the Brookings Institution, the cost of creating synthetic media has plummeted while its sophistication has risen sharply. What once required specialized skills and expensive software can now be achieved with a few simple prompts. This democratization of disinformation is profoundly destabilizing.

Beyond Visuals: The Power of AI-Generated Narratives

The Minneapolis case highlights a particularly insidious trend: AI isn’t just creating fake images; it’s constructing entire narratives around them. Grok’s supposed “unmasking” of the ICE agent, and the subsequent targeting of innocent people based on that false identification, demonstrates how quickly AI can fabricate and spread damaging accusations. This goes beyond simple misinformation; it’s active disinformation designed to incite anger and distrust.

This is particularly concerning in the context of upcoming elections. Experts warn that AI could be used to generate personalized disinformation campaigns targeting specific voters, exploiting existing biases and vulnerabilities. A study by the Center for Strategic and International Studies (CSIS) found that 76% of global election stakeholders believe AI-generated disinformation poses a significant threat to democratic processes.

The Challenge of Detection: Can We Tell What’s Real?

Detecting AI-generated content is becoming increasingly difficult. Tools exist to identify deepfakes and manipulated images, but they are constantly playing catch-up with advances in AI technology. These tools also aren’t foolproof and can often produce false positives or false negatives.

Hany Farid, a leading expert in digital forensics at UC Berkeley, emphasizes that AI-powered enhancement, even with good intentions, can introduce inaccuracies. “AI has a tendency to hallucinate facial details, leading to an enhanced image that may be visually clear, but that may also be devoid of reality with respect to biometric identification,” he explains. This means that even attempts to verify information using AI can inadvertently contribute to the spread of misinformation.

NPR’s guide to identifying AI-generated images offers practical tips, but human critical thinking remains the most important defense.

Future Trends: What’s on the Horizon?

The current situation is just the beginning. Here are some emerging trends to watch:

- AI-Powered Bots and Sock Puppets: AI will be used to create more sophisticated bots and “sock puppet” accounts on social media, capable of engaging in realistic conversations and spreading disinformation at scale.

- Hyper-Personalized Disinformation: AI will enable the creation of highly targeted disinformation campaigns tailored to individual users’ beliefs and vulnerabilities.

- The Blurring of Reality: As AI-generated content becomes more realistic, it will become increasingly difficult to distinguish between what is real and what is fake, leading to a widespread erosion of trust.

- AI-on-AI Warfare: We may see a future where AI systems are used to both create and detect disinformation, leading to a constant arms race between attackers and defenders.

FAQ: Navigating the Age of AI Disinformation

- Q: Can I trust anything I see online anymore? A: Not without critical evaluation. Assume everything you see is potentially manipulated and verify information from multiple, reputable sources.

- Q: What can I do to protect myself from disinformation? A: Be skeptical, fact-check, and be aware of your own biases. Avoid sharing information without verifying it first.

- Q: Are there any tools that can help me detect AI-generated content? A: Several tools are available, but they are not foolproof. Use them as one part of a broader verification process.

- Q: What role do social media platforms have in combating disinformation? A: Platforms have a responsibility to invest in technologies and policies to detect and remove disinformation, but they also need to balance this with free-speech concerns.

The challenge of combating AI-fueled disinformation is immense, but it’s not insurmountable. By fostering media literacy, investing in detection technologies, and holding platforms accountable, we can mitigate the risks and protect the integrity of our information ecosystem. The future of truth depends on it.

What are your thoughts on the rise of AI-generated disinformation? Share your concerns and strategies in the comments below.